Provider or Deployer?

The obligations which apply to organisations under the EU AI Act depend heavily on how such organisations build or procure the AI technologies they use.

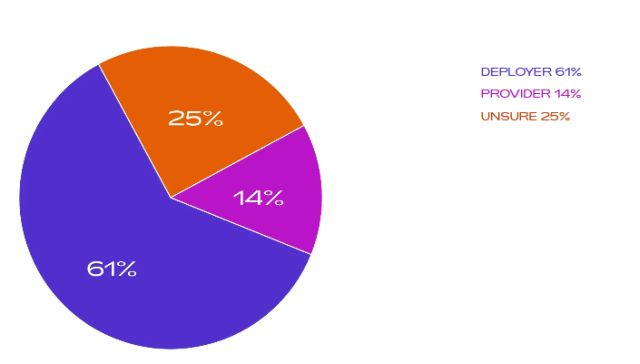

Providers, who design and build proprietary AI systems or sell AI systems to others, face a much greater regulatory burden under the Act than Deployers, who simply implement off-the-shelf solutions. In our survey, 25% of respondents remain uncertain about which category they fall into.

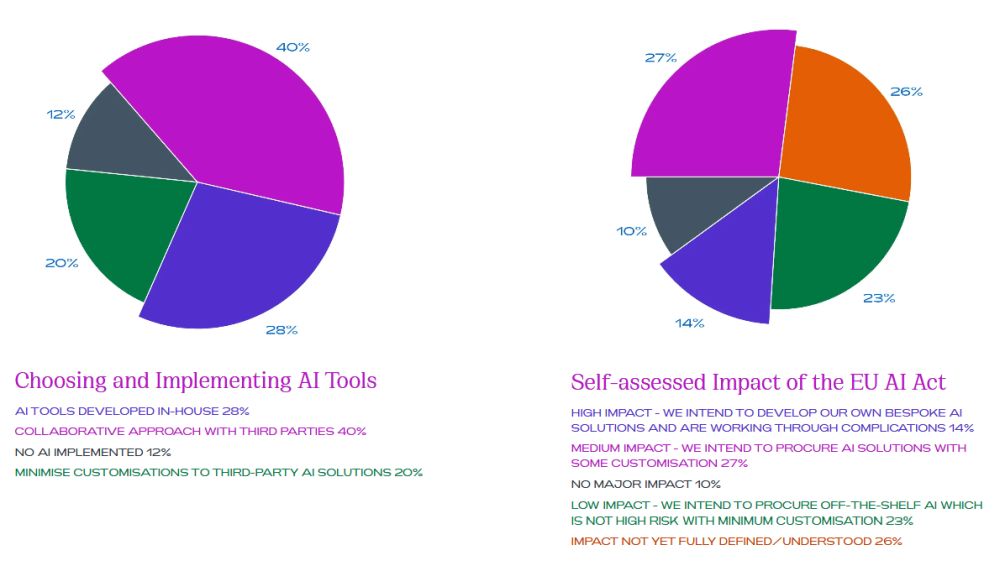

Furthermore, when asked about their approach to choosing and implementing AI tooling for their organisations, 28% of respondents confirmed that they are developing AI tools in-house, while 40% confirmed that they are working collaboratively with third parties to create bespoke AI tooling.

How is your organisation defined by the EU AI Act?

It is important to note that under the EU AI Act, an organisation that significantly alters or tailors an AI tool or system to meet its own bespoke needs may be recognised as the Provider of the altered system, and will then need to comply with the more burdensome obligations which accompany this classification. As such, when we compare the results of our survey, it is clear that more than 25% of respondents do not yet understand their role within the AI ecosystem.

Misclassification can lead to significant compliance gaps, so whether you are building AI systems in-house or integrating third-party solutions into your operating model, knowing your correct designation under the EU AI Act for each AI system you build, adapt or use is the first step towards responsible and lawful AI use.

Level of Impact of the EU AI Act

The survey results show that many respondents may have underestimated the impact that the EU AI Act will have on their organisations.

Looking at the graphs below, we can see that while 28% of respondents confirmed that they are developing AI tooling in-house, and thus will have the greatest number of obligations under the EU AI Act, only 14% of respondents believe they will be highly impacted by the legislation. Similarly, while 40% of respondents are working closely with third parties to develop and modify AI solutions to meet their specific needs, only 27% believe the legislation will have a medium impact on them.

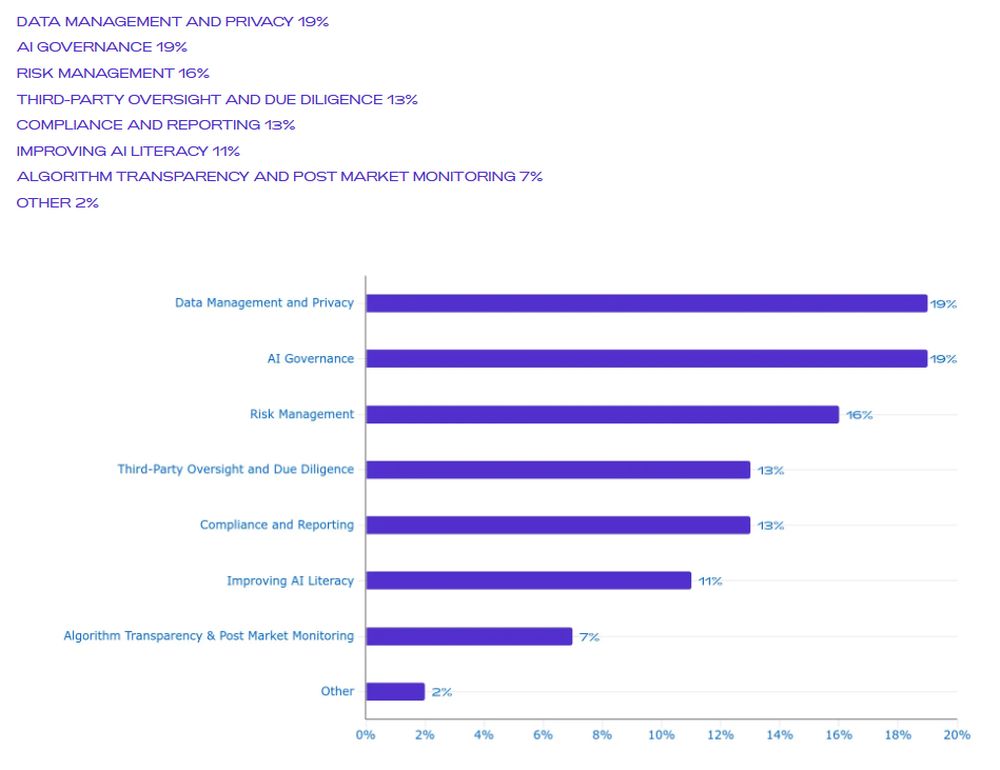

Key Cost and Resource Impacts of EU AI Act on Organisations

We also asked participants to identify the areas of the EU AI Act that are likely to have the biggest impact on their organisation in terms of cost and resources.

The data shows that respondents expect Data Management and Privacy (19%) and AI Governance (19%) to have the biggest impact, indicating that organisations will need to allocate substantial resources to ensure compliance in these domains. Organisations that already have regulatory requirements around data governance, such as banks and insurers, may well see a strategic advantage here.

These are followed closely by Risk Management (16%), highlighting the importance of identifying and mitigating AI-related risks. Third-Party Oversight and Due Diligence, and Compliance and Reporting (13% each), also represent considerable resource commitments, emphasising the need for thorough evaluation of external partners and adherence to regulatory requirements.

Improving AI Literacy was selected by only 11% of respondents, but it arguably deserves to be treated as a high-impact area in terms of cost and resources, as AI literacy is crucial for fostering a knowledgeable workforce capable of navigating AI technologies effectively. This need is underscored by the EU AI Act, which now mandates that organisations ensure all relevant stakeholders (including employees, contractors, and board members) possess sufficient AI literacy to responsibly deploy and oversee AI systems. As highlighted in our briefing on AI literacy, this shift transforms AI literacy from a mere educational goal into a legal obligation and strategic imperative.

This article contains a general summary of developments and is not a complete or definitive statement of the law. Specific legal advice should be obtained where appropriate.